Selected ongoing projects:

ReconCell aims at designing and implementing a new kind of an autonomous robot workcell, which will be attractive not only for large production lines but also for few-of-a-kind production, which often takes place in SMEs. The proposed workcell is based on novel ICT technologies for programming, monitoring and executing assembly operations in an autonomous way. It can be nearly automatically reconfigured to execute new assembly tasks efficiently, precisely, and economically with a minimum amount of human intervention. This approach is backed up by a rigorous business-case analysis, which shows that the ReconCell system is economically viable also for SMEs.

ReconCell is part of the EU initiative I4MS (ICT Innovation for Manufacturing SMEs).

Current practice is such that production systems are designed and optimized to execute the exact same process over and over again. However, the planning and control of production systems has become increasingly complex regarding flexibility and productivity, as well as the decreasing predictability of processes. The full potential of open CPS has yet to be fully realized in the context of cognitive autonomous production systems.

A reconfigurable robot workcell can be used to integrate, customize, test, validate and demonstrate AUTOWARE innovations, prior to the end-user implementation and market launch, in the context of robotics and automation. Experiments will be performed in the area of human robot collaboration, since the proposed workcell includes robots that are safe for collaboration with humans. The aim is to show that robots can be used to augment the capabilities of human workers, freeing them to do what humans are good at; dexterity and flexibility rather than repeatability and high precision.

Most of the tasks performed by humans require coordinated motion of both arms. Dual-arm robotic systems are therefore needed if the robots are to effectively cooperate with human workers or even replace them in production processes. The advantage of dualarm anthropomorphic robots is that they can work with humans without major redesigns of the task or workplace. Bimanual manipulation is also the key feature to more advanced humanoid and service robots, which are expected act and some problems in ways similar to humans. Lately several major manufacturers of industrial robots have started to develop dual arm systems. However, to effectively exploit dualarm robotic systems in industry and services, it is necessary to develop efficient control, learning and adaptation methods, which are currently still not available in industrial and service robotics.

The proposed project deals with these opened questions by addressing the following issues:

- Development of new efficient methods for learning and demonstration of bimanual tasks. Two modes will be considered: kinesthetic guiding and demonstration using haptic devices. Within this approach, besides position and orientation trajectories, also force and torque profiles will need to be captured.

- Development of new algorithms for efficient and autonomous adaptation of bimanual coordinated tasks to deviations, which are induced by inaccurate grasping, deviations in workpiece geometry and inaccurately demonstrated trajectories.

- Verifications of the developed methods and algorithms on some typical industrial and domestic tasks that involve automated bimanual assembly. The following three tasks will be analyzed in detail: bimanual peg in hole, bimanual assembly of an automotive light and bimanual glass wiping.

Past projects:

Current research in enactive, embodied cognition is built on two central ideas: 1) Physical interaction with and exploration of the world allows an agent to acquire and extend intrinsically grounded, cognitive representations and, 2) representations built from such interactions are much better adapted to guiding behaviour than human crafted rules or control logic. Exploration and discriminative learning, however are relatively slow processes. Humans, on the other hand, are able to rapidly create new concepts and react to unanticipated situations using their experience. “Imagining” and “internal simulation”, hence generative mechanisms which rely on prior knowledge are employed to predict the immediate future and are key in increasing bandwidth and speed of cognitive development. Current artificial cognitive systems are limited in this respect as they do not yet make efficient use of such generative mechanisms for the extension of their cognitive properties.

The Xperience project will address this problem by structural bootstrapping, an idea taken from child language acquisition research. Structural bootstrapping is a method of building generative models, leveraging existing experience to predict unexplored action effects and to focus the hypothesis space for learning novel concepts. This developmental approach enables rapid generalization and acquisition of new knowledge and skills from little additional training data. Moreover, thanks to shared concepts, structural bootstrapping enables enactive agents to communicate effectively with each other and with humans. Structural bootstrapping can be employed at all levels of cognitive development (e.g. sensorimotor, planning, communication).

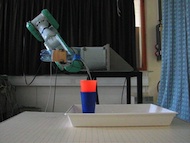

ACAT focuses on the problem how artificial systems (robots) can understand and utilize information made for humans. For this, ACAT generates a dynamic process memory by extraction and storage of action categories from large bodies of human compatible sources (text, images). Action categories are designed to include the actual action-encoding but also large amounts of context information ("background"). They are obtained by combining linguistic analysis with grounded exploration and action-simulation and are assembled in the action-specific knowledge base of ACAT. The ACAT system then uses action-categories to compile robot-executable plans. Execution benefits strongly from the rich context information present in the action-categories because this allows for generalization (for example replacement of objects in an action). It also permits us to specifically address ambiguity, incompleteness and uncertainty in planning. Plans are grounded by perception and execution, which takes place by a robot. This leads to a life-long update process of the knowledge base.

The ultimate purpose of ACAT is to equip the robot – on an ongoing basis – with abstract, functional knowledge, normally made for humans, about relations between actions and objects leading to a system which can act meaningfully. As industrially relevant scenario, ACAT uses "instruction sheets" (manuals), made for human workers, and translates these into a robot-executable format. This way the robot will be able to partially take over human tasks without time-consuming programming procedures. Similar to computer science, where the development of the first compilers had led to a major step forward, the main impact of this project is that ACAT develops a robot-compiler, which translates human understandable information into a robot-executable program.

IntellAct addresses the problem of understanding and exploiting the meaning (semantics) of manipulations in terms of objects, actions and their consequences for reproducing human actions with machines. This is in particular required for the interaction between humans and robots in which the robot has to understand the human action and then to transfer it to its own embodiment. IntellAct will provide means to allow for this transfer not by copying movements of the human but by transferring the human action on a semantic level. IntellAct consists of three building blocks:

• Learning: Abstract, semantic descriptions of manipulations are extracted from video sequences showing a human demonstrating the manipulations;

• Monitoring: In the second step, observed manipulations are evaluated against the learned, semantic models;

• Execution: Based on learned, semantic models, equivalent manipulations are executed by a robot.

The analysis of low-level observation data for semantic content (Learning) and the synthesis of concrete behaviour (Execution) constitute the major scientific challenge of IntellAct. Based on the semantic interpretation and description and enhanced with low-level trajectory data for grounding, two major application areas are addressed by IntellAct: First, the monitoring of human manipulations for correctness (e.g., for training or in high-risk scenarios) and second, the efficient teaching of cognitive robots to perform manipulations in a wide variety of applications. To achieve these goals, IntellAct brings together recent methods for:

• Parsing scenes into spatio-temporal graphs and so-called “Semantic Event Chains‟.

• Probabilistic models of objects and their manipulation.

• Probabilistic rule learning.

• Dynamic motion primitives for trainable and flexible descriptions of robotic motor behaviour.

Learning, analysis, and detection of motion in the framework of a hierarchical compositional visual architecture, Slovenian research agency project

Scientific advances in the recent years, especially in the field of neuroscience, have provided us with inspiration and insights that have given rise to novel approaches in computer vision. Far from duplicating the functionality of the human brain, they aim to improve the performance of computer vision methods by utilizing a selection of biologically inspired design principles. One such principle is the concept of hierarchical compositionality, which has been already exploited in the design of stateoftheart object categorization methods, with significant contributions from the proposers of this project. Compared to the other state of the art approaches, the approaches based on hierarchical compositionality allow for much more efficient use of the existing resources. This is achieved through sharing of both the representation units and the computations, and by transfer of the knowledge, therefore making the learning process much more efficient.

The proposed project aims at a holistic approach towards learning, detection and recognition / categorisation of the visual motion and the phenomena derived from it. The approach is based on a novel and powerful paradigm of learning multilayer compositional hierarchies. While individual ingredients, such as the hierarchical processing, compositionality and incremental learning, have already been subjects of a research, they have, to the best of our knowledge, never been treated in a unified motionrelated framework. Such a framework is crucial for robustness, versatility, ease of learning and inference, generalisation, realtime performance, transfer of the knowledge, and scalability for a variety of cognitive vision tasks.