Aim

Computer vision is crucial for achieving adaptive, flexible, and robust robotics. In recent years we have witnessed significant progress in areas such as 3D object and scene acquisition and modeling, real-time processing, and visual learning. In particular, statistical approaches stemming from machine learning have led to the development of many impressive vision systems, whose performance can, in some cases, even surpass human capabilities. However, despite this progress, the success stories are mainly limited to specific tasks and scenes. General-purpose vision, as needed by robots to operate in ever-changing natural environments and interact with people, remains elusive. What is needed is the development of representations and learning mechanisms that allow a robot to acquire new visual models and procedures in an incremental (developmental), open-ended way, grounded in its experiences of acting in its working environment. Active robot exploration can provide new data that is highly relevant for expanding the robot's capabilities. However, solutions for achieving sharable representations and scalable mechanisms that enable visual learning from small amounts of data remain vital for overall success.

Workshop Program

| Time | Speaker | Title |
| --- | --- | --- |
| 09:00 - 09:05 | Welcome | |
| 09:05 - 09:35 | Norbert Krüger, University of Southern Denmark, Odense | Hierarchical structure of the human visual system |
| 09:35 - 10:05 | Silvio Sabatini, University of Genoa | Deep representation hierarchies for 3D active vision |
| 10:05 - 10:35 | Aleš Leonardis, University of Birmingham | Compositional hierarchies for scalable robot vision |
| 10:35 - 10:55 | Coffee break | |
| 10:55 - 11:25 | Michael Zillich, Technical University Vienna | Perceptual grouping in RGB-D for semantically rich visual representations |
| 11:25 - 11:55 | Tomohiro Shibata, Kyushu Institute of Technology, Kitakyushu | A hybrid direct feature matching based on-line loop-closure detection algorithm for topological mapping |
| 11:55 - 12:25 | Mario Fritz, Max Planck Institute for Informatics, Saarbrücken | Scalable learning and perception |
| 12:25 - 14:00 | Lunch break | |
| 14:00 - 14:30 | Francesca Odone, University of Genoa | Teaching iCub to see its world |
| 14:30 - 15:00 | Aaron Bobick, Georgia Tech, Atlanta | Structured representations of human robot collaborative action |
| 15:00 - 15:30 | Tamim Asfour, Karlsruhe Institute of Technology | Active visual perception for humanoid robots |
| 15:30 - 15:50 | Coffee break | |
| 15:50 - 16:20 | Kostas Daniilidis, University of Pennsylvania, Philadelphia | Active vision revisited |
| 16:20 - 16:50 | Manuel Lopes, INRIA, Bordeaux | Learning Tasks and Representations from Human-Machine Interactions |
| 16:50 - 17:10 | Aleš Ude, Jožef Stefan Institute, Ljubljana | Active visual learning on a humanoid robot |
| 17:10 - 17:30 | Discussion | |

The program can also be downloaded as a PDF.

Main Topics

- Scalable visual representations

- Hierarchical visual representations in robotics

- Hierarchical architectures in human vision

- Visual learning by active manipulation

- Visual learning on mobile robots

- Open-ended statistical learning of visual representations

- Developmental approaches to visual learning on robots

- Learning of visual representations for robot manipulation

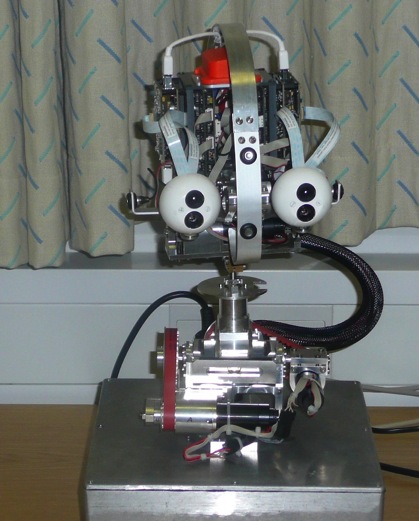

- Active vision systems and humanoid heads

- Visual attention / curiosity

Organizers

- Aleš Ude, Jožef Stefan Institute, Dept. of Automatics, Biocybernetics, and Robotics, Humanoid and Cognitive Robotics Lab, Slovenia

- Aleš Leonardis, University of Birmingham, School of Computer Science, Intelligent Robotics Lab, UK

- Kostas Daniilidis, University of Pennsylvania, GRASP Laboratory, Philadelphia, USA

- Tamim Asfour, Karlsruhe Institute of Technology, Institute for Anthropomatics, Germany

Time and Place

The workshop will take place on May 31, 2014, as part of the workshop programme of the IEEE International Conference on Robotics and Automation (ICRA), which will be held in Hong Kong, China.

Support

This workshop is supported by

and the IEEE Robotics and Automation Society technical committees

- IEEE-RAS Technical committee on Computer & Robot Vision,

- IEEE-RAS Technical committee on Mobile Manipulation, and

- IEEE-RAS Technical committee on Humanoid Robots.
